
Wolfram Research's Mathematica - come see at Supercomputing 2009!

Supercomputer-style Cluster Computing Support for Mathematica

The new Supercomputing Engine for Mathematica (SEM, a.k.a. the PoochMPI Toolkit) combines Wolfram Research's Mathematica with the easy-to-use, supercomputer-compatible Pooch clustering technology of Dauger Research. This fusion applies the parallel computing paradigm of today's supercomputers and the ease of use of Pooch to Mathematica, enabling possibilities that none of these technologies could achieve alone.


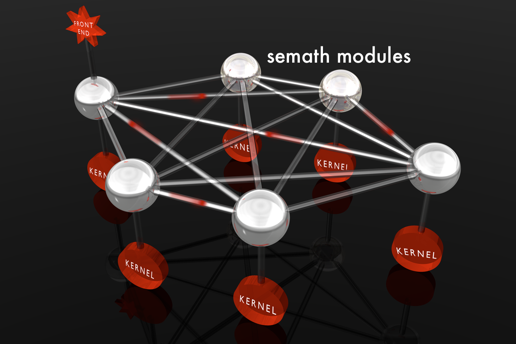

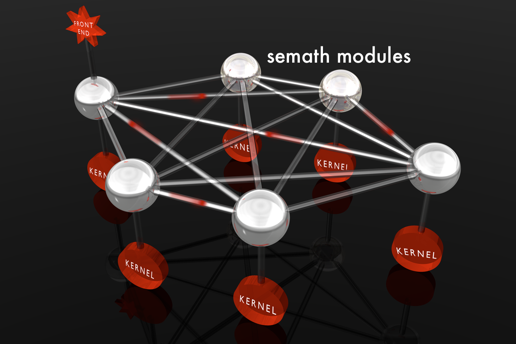

Closely following the supercomputing industry-standard Message-Passing Interface (MPI), the Supercomputing Engine creates a standard way for all Mathematica kernels in the cluster to communicate with one another directly. In contrast to typical grid implementations, which are solely master-slave or server-client, this solution has all kernels communicate with each other directly and collectively, the way modern supercomputers do.
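To make the contrast concrete, here is a minimal sketch that counts messages for one exchange in which every kernel has something for every other kernel. It is plain Python and does not use SEM, Pooch, or any MPI library; the function names `hub_traffic` and `direct_traffic` are hypothetical, and the model assumes the master in the relayed case only forwards messages and originates none.

```python
# Plain-Python traffic count; this does not use SEM, Pooch, or any MPI library.

def hub_traffic(n_kernels):
    """Messages handled per kernel when kernel 0 relays all worker-to-worker traffic."""
    handled = [0] * n_kernels          # messages sent or received, per kernel
    for src in range(1, n_kernels):
        for dst in range(1, n_kernels):
            if src == dst:
                continue
            handled[src] += 1          # worker sends to the master
            handled[0] += 2            # master receives, then forwards
            handled[dst] += 1          # destination receives from the master
    return handled


def direct_traffic(n_kernels):
    """Messages handled per kernel when each kernel sends straight to every other."""
    handled = [0] * n_kernels
    for src in range(n_kernels):
        for dst in range(n_kernels):
            if src != dst:
                handled[src] += 1      # one send
                handled[dst] += 1      # one receive
    return handled


if __name__ == "__main__":
    for n in (4, 16, 64):
        print(n, max(hub_traffic(n)), max(direct_traffic(n)))
```

In this model the busiest kernel in the relayed case is the master, whose load grows with the square of the cluster size (420 messages for 16 kernels), while with direct communication no kernel handles more than 30 messages for the same 16 kernels.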


As they do for other cluster applications, Pooch and MacMPI provide the support infrastructure that enables this supercomputing-style parallel startup and inter-kernel communication. After locating, launching, and coordinating Mathematica kernels on a cluster, the Supercomputing Engine creates and supports an "all-to-all" communication topology, which high-performance computing practitioners have found necessary, since the earliest large supercomputers, to address the largest problems in scientific computing. All of this happens within the Mathematica computing environment.

Besides locating, launching, and coordinating Mathematica kernels on a cluster, the Supercomputing Engine provides an API, adapted for use in Mathematica, that closely follows the MPI standard. By harnessing Mathematica kernels together the way supercomputers are, this fusion of Mathematica, Pooch, and MacMPI makes accessible problems that never were before.


Truly running Mathematica in parallel combines its power with Pooch's ease of use and reliability in high-performance computing. This new technology, as Pooch has done before, will further enhance the power of both clusters and Mathematica for their users.

The Supercomputing Engine for Mathematica was produced through a collaboration between Dauger Research, Inc., and Advanced Cluster Systems, LLC.

Parallelization of Other Applications

To encourage parallelization of other applications such as iMovie, iDVD, Final Cut Pro, or DVD Studio Pro, please fill out our petition page urging their developers to parallelize their code, or base your own messages on our petition.

Unprecedented Capabilities

Existing Mathematica users can take on larger problems:

- Problems that take too long on one node (days or weeks) can be completed in less time

- Problems that require more RAM than one node can hold become possible using all the RAM on the cluster

Experienced MPI users can address problems no cluster could do before:

- Cluster computing is not just for numerics anymore

- Symbolic manipulation and processing that is inconvenient or impractical in Fortran and C can be done at a scale only clusters can handle

Developing MPI users can prototype parallel codes:

- Use the approachable environment of Mathematica to learn how to program clusters and supercomputers

- Develop new codes and algorithms for parallel computing faster by combining Mathematica's rich feature set with MPI


Documentation:

Supercomputing Engine for Mathematica Manual

(2.5 MB download in PDF)


Contains explanations, code examples, an MPI reference, and installation instructions.

Posters:

Parallel Computation for Complex Linkage Design using SEM

(1 MB)


A. Arredondo, J. M. McCarthy, and G. S. Soh of the UCI School of Engineering applied SEM to linkage design, a computationally intensive process that determines the best physical configuration out of all possible configurations, given mission criteria and mechanical constraints.


Pingpong MPI Benchmark - SEM vs. "grid"

(656 kB)


A summary of results comparing SEM with a "grid" approach to communication using an adapted

benchmark that tests network bandwidth of supercomputing hardware.


Requirements:

- Mathematica 6 or later

- Pooch v1.8 or later

- 100BaseT Ethernet switch or faster

- Macintosh: Intel-based and/or PowerPC-based Macintoshes running OS X 10.4 or later

- Linux: Intel-based nodes running 64-bit Linux (Red Hat 5.3, Ubuntu 9, SUSE Enterprise 10, or later)


Slides:

Video:

Supercomputing Engine for Mathematica Video Presentation

(32:30)


Video of presentation given at Wolfram Technology Conference 2006

Code Examples:


mathmpibasics.nb - Basic examples of using MPI within Mathematica including

remote function calls, list manipulation, and graphics generation in a cluster.


parallellife.nb - An example showing how to parallelize a problem with interdependency. It also happens to be an example of cellular automata on a cluster within Mathematica; see the Parallel Life tutorial!
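The interdependency in a cellular automaton comes from cells on the edge of one kernel's region needing the neighboring kernel's edge cells. The following is a minimal sketch of that idea in plain Python, not the contents of parallellife.nb and not SEM code: the grid is cut into row strips, each simulated kernel borrows one boundary row from each neighbor, and the result is compared with a serial run. All function names are hypothetical, the grid is assumed to have dead cells beyond its border, and every strip is assumed to hold at least one row.

```python
# Plain-Python simulation of row-wise domain decomposition; no MPI library is used.

def life_step(grid):
    """One Game of Life generation on a grid with dead cells beyond the border."""
    rows, cols = len(grid), len(grid[0])
    new = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            n = sum(
                grid[rr][cc]
                for rr in range(max(r - 1, 0), min(r + 2, rows))
                for cc in range(max(c - 1, 0), min(c + 2, cols))
                if (rr, cc) != (r, c)
            )
            new[r][c] = 1 if n == 3 or (n == 2 and grid[r][c]) else 0
    return new


def split_rows(grid, n_parts):
    """Divide the rows into n_parts contiguous strips, one per simulated kernel."""
    base, extra = divmod(len(grid), n_parts)
    strips, start = [], 0
    for p in range(n_parts):
        size = base + (1 if p < extra else 0)
        strips.append(grid[start:start + size])
        start += size
    return strips


def parallel_life_step(strips):
    """Advance every strip one generation after borrowing edge rows from neighbors."""
    dead = [0] * len(strips[0][0])
    new_strips = []
    for p, strip in enumerate(strips):
        # Stand-in for a message exchange: read each neighbor's boundary row.
        above = strips[p - 1][-1] if p > 0 else dead
        below = strips[p + 1][0] if p < len(strips) - 1 else dead
        padded = [above] + strip + [below]
        # Step the padded strip, then discard the borrowed rows.
        new_strips.append(life_step(padded)[1:-1])
    return new_strips


if __name__ == "__main__":
    grid = [[0] * 8 for _ in range(8)]
    for r, c in [(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)]:   # a glider
        grid[r][c] = 1
    serial, strips = grid, split_rows(grid, 3)
    for _ in range(4):
        serial = life_step(serial)
        strips = parallel_life_step(strips)
    joined = [row for strip in strips for row in strip]
    print(joined == serial)
```

On a real cluster the two lines that read a neighbor's row would become a paired send and receive between adjacent kernels, which is only possible when kernels can talk to each other rather than only to a master.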


mathplasma.nb - An electrostatic plasma simulation implemented in Mathematica along with a parallelized version using MPI. This approach to plasma physics is well-published, for example:


• V. K. Decyk, C. D. Norton, Comp. Phys. Communications 164 (2004) 80-85


Extends Mathematica

The Supercomputing Engine adds and configures library calls to the Mathematica environment:

- Basic MPI calls for node-to-node communication (mpiSend, mpiRecv, mpiSendRecv)

- Asynchronous MPI calls to perform work while waiting for messages (mpiIsend, mpiIrecv, mpiTest)

- Collective MPI calls for multi-node communication patterns (mpiBcast, mpiGather, mpiAllgather, mpiScatter, mpiAlltoall, mpiReduce, mpiAllreduce)

- MPI Communicator calls for constructing arbitrary subsets of nodes (mpiCommSize, mpiCommRank, mpiCommSplit)

- High-level parallel calls for commonly used tasks and patterns (ParallelDo, ParallelTable, ParallelFunction, EdgeCell, ParallelTranspose, ParallelInverse, Elementmanage, ParallelFourier)

- Parallel I/O for saving and loading data from nodes across a cluster (ParallelPut, ParallelGet, ParallelBinaryPut, ParallelBinaryGet)

- and more ...
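For readers new to MPI, the collective calls in the list above follow well-known data-movement patterns. The sketch below models those patterns in plain Python, with position i of each list standing for the data held by rank i. It is an illustration of the general MPI semantics only: the helper names and argument lists are hypothetical and are not the signatures of the SEM functions, which are documented in the manual.

```python
# Plain-Python model of collective semantics; index i of each list stands for rank i.
# These helpers are hypothetical illustrations, not the SEM functions named above.
from functools import reduce
import operator


def bcast(values, root):
    """Every rank ends up with the root's value."""
    return [values[root] for _ in values]


def scatter(chunks, n_ranks):
    """The root's list of n_ranks chunks is dealt out, chunk i to rank i."""
    if len(chunks) != n_ranks:
        raise ValueError("need exactly one chunk per rank")
    return list(chunks)


def gather(values, root):
    """Only the root receives the list of all contributions, in rank order."""
    return [list(values) if rank == root else None for rank in range(len(values))]


def allgather(values):
    """Every rank receives the list of all contributions."""
    return [list(values) for _ in values]


def reduce_to(values, op, root):
    """Only the root receives the combined result."""
    total = reduce(op, values)
    return [total if rank == root else None for rank in range(len(values))]


def allreduce(values, op):
    """Every rank receives the combined result."""
    total = reduce(op, values)
    return [total for _ in values]


def alltoall(send):
    """Rank i's j-th item is delivered to rank j as its i-th item (a transpose)."""
    n = len(send)
    return [[send[src][dst] for src in range(n)] for dst in range(n)]


if __name__ == "__main__":
    print(allreduce([1, 2, 3], operator.add))
    print(alltoall([[1, 2], [3, 4]]))
```

The "all" variants differ from their plain counterparts only in who receives the result, which is why they depend on every kernel being reachable from every other kernel.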

Simplified organizational diagram of the Supercomputing Engine for Mathematica. Communication between semath instances can be all-to-all, not just between nearest neighbors.

Why is using MPI an advantage?

It's an enabling technology.

Designers of the earliest general-purpose parallel computers knew that intercommunication between all processing elements was necessary in order to address major scientific problems. This technology provides that kind of support for Mathematica, whereas most grid solutions implement only communication between one master and many slaves.

It's like employees of a corporation.

Imagine a company with one boss, in which every employee has a phone that can only call that boss. It might work for special cases, but for even modestly complex jobs, the boss would go crazy and the company wouldn't get very far. Instead, one installs a telephone network that allows employees to phone each other.

MPI allows for communication hierarchies too.

Let's say every Wolfram Research employee in the world had a phone that only called Stephen Wolfram's cell phone, and these employees were not allowed to talk to anyone but Dr. Wolfram. That would not work well...
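In standard MPI, hierarchies such as departments within a company are usually built by splitting a communicator: each rank picks a "color", ranks with the same color form a sub-group, and an optional "key" orders the ranks inside it. The following minimal sketch models that rule in plain Python. It is not the SEM mpiCommSplit call, whose exact arguments are not given here; the function name `comm_split` and its return format are assumptions made for illustration.

```python
# Plain-Python model of MPI_Comm_split semantics; not the SEM mpiCommSplit call itself.

def comm_split(colors, keys=None):
    """Return, for each old rank, (color, new_rank) in its sub-communicator.

    colors[i] is the group chosen by old rank i; keys[i] orders ranks inside a
    group, with ties broken by old rank, as in the MPI standard.
    """
    n = len(colors)
    if keys is None:
        keys = [0] * n
    groups = {}
    for rank in range(n):
        groups.setdefault(colors[rank], []).append(rank)
    result = [None] * n
    for color, members in groups.items():
        members.sort(key=lambda r: (keys[r], r))
        for new_rank, old_rank in enumerate(members):
            result[old_rank] = (color, new_rank)
    return result


if __name__ == "__main__":
    # Eight kernels arranged as two "departments" of four.
    departments = [rank // 4 for rank in range(8)]
    print(comm_split(departments))
```

Each department can then run its own collective operations internally, while the original communicator still lets any kernel reach any other.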

For further reading, a more general discussion of these differences and the importance of communication in computation is available. A video of the presentation we gave at the Wolfram Technology Conference 2006 is also online.

If you have further questions, please

contact us.

Purchasing

You may purchase the Supercomputing Engine for Mathematica at the

Dauger Research Store.
